Functional Analysis

My Intuitive Overview of Functional Analysis (a draft)

What is functional analysis?

Functional analysis can be seen as extending the concepts of algebra, and more precisely of linear algebra, into infinite-dimensional spaces. It is a blend of algebra and topology. Linear algebra provides the vector-space structure. Topology is needed to make sense of limits and convergence, which in finite dimensions come essentially for free because all norms on $\mathbb{R}^n$ are equivalent. But what exactly does functional analysis aim to do?

- It focuses on the study of topological vector spaces and the maps between them, usually under additional algebraic and topological assumptions on these maps, such as linearity and continuity.

- It explores infinite-dimensional spaces, where functions themselves become the primary objects of study. This shift was necessary because many problems in analysis, differential equations, and physics naturally lead us to consider spaces of functions, which can’t be adequately described using finite-dimensional methods.

For example …

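Continuous functions on $[a, b]$

A function space, such as $C([a, b])$, the set of continuous real-valued functions on a closed interval $[a, b]$, is infinite-dimensional.

Consider orthogonal elements from the Fourier basis, for instance the functions $\sin(nx)$, $n = 1, 2, 3, \dots$, on $[a, b] = [-\pi, \pi]$. They are all continuous, so they belong to $C([-\pi, \pi])$. They are also orthogonal for the inner product $\langle f, g \rangle = \int_{-\pi}^{\pi} f(x)\, g(x)\, dx$.

Each curve $\sin(nx)$ is a single element (a “vector”) of this space. Distinct curves are orthogonal, hence linearly independent.

No finite subset of these functions can describe all possible functions in $C([-\pi, \pi])$. A finite set spans only a finite-dimensional subspace, while there are infinitely many linearly independent functions $\sin(nx)$.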

Delay Differential Equation (DDE)

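A standard Ordinary Differential Equation (ODE) like:

$$x'(t) = f(t, x(t))$$

defines the future state of $x$ from its current value alone: the rate of change at time $t$ depends only on $x(t)$.

In contrast, a Delay Differential Equation (DDE) like:

$$x'(t) = f(t, x(t), x(t - \tau)), \qquad \tau > 0,$$

requires the value of $x$ at the earlier time $t - \tau$.

This means that to solve the equation, you need to know $x$ not only at the initial time $t_0$ but on the whole interval $[t_0 - \tau, t_0]$. This is given as an initial history $x(t_0 + \theta) = \phi(\theta)$ for $\theta \in [-\tau, 0]$.

The system now depends on an entire function (the history of $x$ over $[t - \tau, t]$), not on a single vector.

The dependency on past values of $x$ is what changes the nature of the state space.

In mathematical terms:

- For an ODE, the state space is finite-dimensional because it is sufficient to specify the value $x(t_0) \in \mathbb{R}^n$, that is, $n$ numbers.

- For a DDE, the state space is infinite-dimensional because the system’s future evolution depends on the entire function $\phi: [-\tau, 0] \to \mathbb{R}^n$, an element of the function space $C([-\tau, 0], \mathbb{R}^n)$.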
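To see the “state is a function” idea in code, here is a minimal sketch that integrates a DDE with forward Euler. It assumes the delay $\tau$ is an exact multiple of the step size $h$. The function name `solve_dde_euler` and the example $x'(t) = -x(t - 1)$ with constant history $\phi = 1$ are illustrative choices, not taken from the text. The point is that the solver must carry a buffer covering the last $\tau$ time units (a discretized history function), not just the current value.

```python
import numpy as np

def solve_dde_euler(f, history, tau, t_end, h):
    """Forward Euler for x'(t) = f(t, x(t), x(t - tau)), starting at t0 = 0.

    Assumes tau and t_end are multiples of h. The values needed to step
    forward include x(t - tau), so the whole history segment on [t - tau, t]
    is kept on the grid, not a single number as for an ODE.
    """
    lag = int(round(tau / h))                  # steps per delay
    t_hist = np.linspace(-tau, 0.0, lag + 1)   # grid on [-tau, 0]
    x = list(history(t_hist))                  # initial history phi sampled on the grid
    n_steps = int(round(t_end / h))
    for k in range(n_steps):
        i = lag + k                            # index of the current time t = k * h
        t = k * h
        x_now, x_delayed = x[i], x[i - lag]    # x(t) and x(t - tau)
        x.append(x_now + h * f(t, x_now, x_delayed))
    t_all = np.linspace(-tau, t_end, lag + n_steps + 1)
    return t_all, np.array(x)

# Example: x'(t) = -x(t - 1) with phi(theta) = 1 on [-1, 0].
# Exact solution: x(t) = 1 - t on [0, 1], and x(2) = -0.5.
t, x = solve_dde_euler(lambda t, x, xd: -xd, lambda s: np.ones_like(s),
                       tau=1.0, t_end=2.0, h=1e-3)
print(x[-1])  # close to -0.5 (first-order Euler error)
```

Changing the history function $\phi$ changes the whole future trajectory even when $\phi(0)$ stays the same. That is exactly why the initial data of a DDE lives in a function space.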

The space of polynomials

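The space of all polynomials is infinite-dimensional. A finite basis fails because of degree. Let $n$ be the largest degree among the proposed basis elements. Then the polynomial $x^{n+1}$ cannot be written as a linear combination of polynomials of degree at most $n$.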
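To make this explicit, here is a short worked argument (a sketch, with real coefficients $c_0, \dots, c_n$ assumed). Let $n$ be the largest degree in a proposed finite basis. Every element of its span then has degree at most $n$, so it suffices to show that $x^{n+1}$ is not such a combination. Suppose it were:

$$
x^{n+1} = \sum_{k=0}^{n} c_k x^k \quad \text{for all } x \in \mathbb{R}
\qquad \Longrightarrow \qquad
p(x) := x^{n+1} - \sum_{k=0}^{n} c_k x^k = 0 \quad \text{for all } x \in \mathbb{R}.
$$

But $p$ has leading coefficient $1$, so it is a nonzero polynomial of degree $n + 1$ with at most $n + 1$ real roots. It cannot vanish at every real $x$, so no such coefficients $c_k$ exist.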

The space of square-integrable functions

The space

This space is infinite-dimensional for the following reasons:

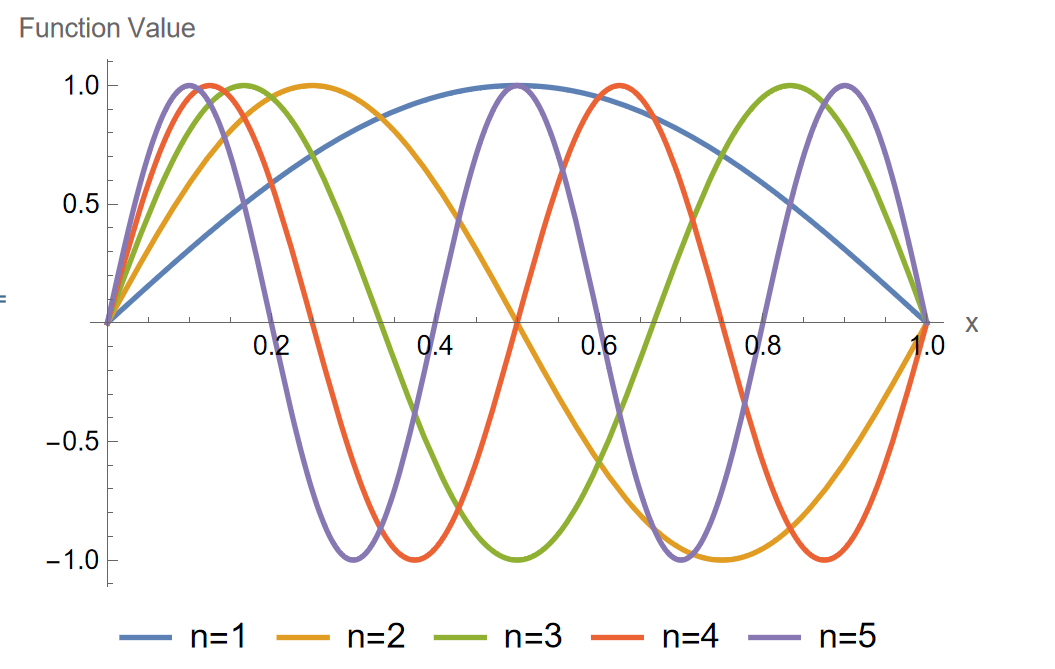

Consider the set of orthogonal functions

Suppose

But, there exist infinitely many orthogonal functions (e.g., the Fourier basis). Adding one more orthogonal function to the set would produce a contradiction, as it could not be expressed in terms of the finite basis.
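A quick numerical illustration of the argument, not a proof: for any $N$ we pick, the Gram matrix of $\sin(x), \dots, \sin(Nx)$ in $L^2([-\pi, \pi])$ is $\pi I_N$, which has rank $N$. The helper `sine_gram_matrix` is hypothetical. The uniform Riemann sum it uses is an assumption that happens to be exact (up to rounding) for trigonometric polynomials of low enough degree.

```python
import numpy as np

def sine_gram_matrix(n_funcs, n_points=1000):
    """Approximate G[m, n] = <sin((m+1)x), sin((n+1)x)> in L^2([-pi, pi]).

    Uses a left Riemann sum on a uniform periodic grid, which is exact up to
    rounding for trigonometric polynomials of degree below n_points.
    """
    x = np.linspace(-np.pi, np.pi, n_points, endpoint=False)
    dx = 2 * np.pi / n_points
    k = np.arange(1, n_funcs + 1)
    S = np.sin(np.outer(k, x))      # row m holds sin((m+1) x) on the grid
    return S @ S.T * dx

G = sine_gram_matrix(8)
print(np.allclose(G, np.pi * np.eye(8)))   # True: distinct sines are orthogonal
print(np.linalg.matrix_rank(G))            # 8: the functions are linearly independent
```

Increasing `n_funcs` (while keeping it well below `n_points`) always gives full rank. This mirrors the fact that no finite $N$ bounds the number of independent elements.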

So, how do we handle these infinite dimensions?

This brings us to the hierarchy of spaces in functional analysis:

Topological spaces: At the foundation, we have topological spaces. They introduce the notion of open sets, which lets us discuss “closeness” without necessarily defining a distance or any other way to measure it.

This allows us to define convergence of sequences in its most general form, and that notion depends on the chosen topology. Changing the topology changes which sets count as neighborhoods of a point, and therefore which sequences converge. Different topologies on the same space thus give different types of convergence (strong, weak, weak*) with different properties.
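A minimal sketch of how two topologies on $L^2([-\pi, \pi])$ disagree, assuming a midpoint-rule discretization and the illustrative test function $g(x) = x$. The sequence $\sin(nx)$ converges weakly to $0$: here $\langle \sin(nx), g \rangle = 2\pi(-1)^{n+1}/n \to 0$, and by the Riemann-Lebesgue lemma the same holds for every $g \in L^2$. It does not converge strongly, since $\|\sin(nx)\| = \sqrt{\pi}$ for every $n$.

```python
import numpy as np

# Midpoint-rule approximation of the L^2([-pi, pi]) inner product.
N_POINTS = 200_000
dx = 2 * np.pi / N_POINTS
x = -np.pi + (np.arange(N_POINTS) + 0.5) * dx

def inner(f_vals, g_vals):
    return np.sum(f_vals * g_vals) * dx

g = x   # fixed test function g(x) = x (illustrative choice)

for n in (1, 10, 100, 1000):
    s_n = np.sin(n * x)
    # <sin(nx), g> shrinks like 1/n, while ||sin(nx)|| stays at sqrt(pi)
    print(n, inner(s_n, g), np.sqrt(inner(s_n, s_n)))
```

The pairing with any fixed function shrinks, yet the “length” never does. Weak convergence to $0$ without strong convergence is impossible in finite dimensions.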

Metric spaces: By introducing a metric, we obtain metric spaces, where we can measure distances between points. This is the natural setting for fixed-point theorems such as the Contraction Mapping Principle (Banach fixed-point theorem). It states that on a complete metric space, every contraction has exactly one fixed point. Such theorems help prove the existence and uniqueness of solutions to equations such as ODEs; the Picard-Lindelöf theorem is proved this way. These concepts have applications in dynamical systems, where understanding the behavior of solutions over time is essential.
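As a minimal sketch of the Contraction Mapping Principle at work, here is Picard iteration for $y' = y$, $y(0) = 1$ on $[0, 0.5]$. The Picard operator $T y(t) = y_0 + \int_0^t f(s, y(s))\, ds$ is a contraction in the sup norm when the Lipschitz constant times the interval length is below $1$ (here $1 \times 0.5$). Its iterates therefore converge to the unique fixed point $e^t$. The helper `picard_iterate` and the grid-based trapezoid integration are assumptions made for illustration.

```python
import numpy as np

def picard_iterate(f, y0, t, tol=1e-12, max_iter=200):
    """Fixed-point iteration y <- T(y), with T(y)(t) = y0 + int_0^t f(s, y(s)) ds.

    y is a function sampled on the grid t; the integral uses the cumulative
    trapezoid rule. Stops when the sup-norm distance between iterates < tol.
    """
    y = np.full_like(t, y0, dtype=float)   # initial guess: the constant function y0
    for k in range(1, max_iter + 1):
        integrand = f(t, y)
        increments = 0.5 * (integrand[1:] + integrand[:-1]) * np.diff(t)
        y_new = y0 + np.concatenate(([0.0], np.cumsum(increments)))
        if np.max(np.abs(y_new - y)) < tol:   # sup-norm (metric) distance
            return y_new, k
        y = y_new
    return y, max_iter

# y' = y, y(0) = 1 on [0, 0.5]: T is a contraction with factor 0.5.
t = np.linspace(0.0, 0.5, 501)
y, n_iter = picard_iterate(lambda s, y: y, 1.0, t)
print(n_iter, y[-1], np.exp(0.5))   # y[-1] agrees with e^0.5 to about 1e-7
```

Completeness matters here: the iterates form a Cauchy sequence, and the theorem needs the space to contain its limit.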

Normed spaces: Introducing a norm assigns a length to each vector, generalizing the absolute value on real numbers or the Euclidean norm in finite-dimensional spaces. Norms help us quantify the size of functions. In normed spaces, we can define dual spaces and study linear functionals, leading to concepts like weak convergence and optimization in these spaces.
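A minimal numerical sketch of “size” under three common norms on functions: the sup norm, the $L^1$ norm and the $L^2$ norm. The helper `function_norms` and its midpoint-rule discretization are assumptions made for illustration. The test function $f(x) = x$ on $[0, 1]$ is an arbitrary choice with exact values $1$, $1/2$ and $1/\sqrt{3} \approx 0.5774$.

```python
import numpy as np

def function_norms(f, a, b, n_points=100_000):
    """Approximate the sup, L^1 and L^2 norms of f on [a, b] from samples.

    Integrals use the midpoint rule; the sup norm is approximated by the
    largest sampled absolute value, so all three are numerical estimates.
    """
    h = (b - a) / n_points
    x = a + (np.arange(n_points) + 0.5) * h   # midpoints of equal cells
    v = np.abs(f(x))
    return {
        "sup": v.max(),
        "L1": v.sum() * h,
        "L2": np.sqrt((v ** 2).sum() * h),
    }

# f(x) = x on [0, 1]: exact values 1, 1/2 and 1/sqrt(3)
print(function_norms(lambda x: x, 0.0, 1.0))
```

The same function gets three different “lengths”. Which norm we pick determines which sequences count as small or convergent.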

Banach spaces: When a normed space is complete, meaning every Cauchy sequence converges to a limit within the space, we have a Banach space. Banach spaces are fundamental because they provide the setting for many powerful theorems:

- The Hahn-Banach Theorem allows the extension of linear functionals and underpins duality principles in optimization.

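- The Banach-Steinhaus Theorem, or Uniform Boundedness Principle, states that a family of bounded linear operators on a Banach space that is bounded at each point is uniformly bounded in operator norm. This ensures the stability of sequences of bounded operators.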

- The Open Mapping Theorem and Closed Graph Theorem help us understand the behavior of linear operators, crucial for solving operator equations.

These theorems have practical implications in solving integral equations and in understanding the continuity and surjectivity of operators between function spaces.
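Completeness is easy to lose, and a short sketch shows how. Equip the polynomials on $[0, 1]$ with the sup norm. The Taylor partial sums $p_n(x) = \sum_{k=0}^{n} x^k / k!$ form a Cauchy sequence, since $\|p_{n+1} - p_n\|_\infty = 1/(n+1)!$. Their uniform limit, however, is $e^x$, which is not a polynomial (no derivative of it is eventually zero). So the polynomials are not a Banach space under this norm, while $C([0, 1])$, which contains $e^x$, is. The grid-based sup norm and the helper names below are illustrative assumptions.

```python
import math
import numpy as np

x = np.linspace(0.0, 1.0, 1001)   # sampling grid on [0, 1]

def taylor_exp(n, x):
    """Partial sum p_n(x) = sum_{k=0}^{n} x^k / k!, a polynomial of degree n."""
    return sum(x ** k / math.factorial(k) for k in range(n + 1))

def sup_dist(u, v):
    """Sup-norm distance on the grid (approximates the norm of C([0, 1]))."""
    return np.max(np.abs(u - v))

# Cauchy: consecutive partial sums differ by exactly 1/(n+1)! in sup norm
for n in (2, 5, 10):
    print(n, sup_dist(taylor_exp(n + 1, x), taylor_exp(n, x)), 1 / math.factorial(n + 1))

# The sequence converges uniformly to exp, which lies outside the polynomials
print(sup_dist(taylor_exp(10, x), np.exp(x)))
```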

Inner product spaces: By introducing an inner product, we can talk about angles and orthogonality between vectors. This leads to the Projection Theorem, fundamental in approximation theory and methods like the least squares approximation used in data fitting and regression analysis. These concepts are widely used in statistics and machine learning for modeling and data analysis.

Hilbert spaces: When an inner product space is complete, we obtain a Hilbert space. Hilbert spaces are the playground for much of functional analysis, especially in areas like:

- Fourier series and transforms: essential for signal processing and communication systems, allowing us to decompose functions into frequencies.

- The spectral theorem: provides tools for studying linear operators, leading to applications in quantum mechanics and quantum computing.

- Weak Formulation of PDEs: By considering weak solutions, we can handle PDEs that may not have classical solutions, using methods like the Galerkin Method and Finite Element Method (FEM) for numerical approximations, which are used in engineering simulations.
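A minimal sketch of Fourier series as orthogonal projection in $L^2([-\pi, \pi])$. The illustrative function $f(x) = x$ is odd, so only sine terms appear, with coefficients $b_k = 2(-1)^{k+1}/k$. Projecting onto $\text{span}\{\sin(kx) : k \le n\}$ gives the best $L^2$ approximation from that subspace, and the error can only decrease as $n$ grows. Exactly, it equals $\sqrt{2\pi^3/3 - 4\pi \sum_{k \le n} 1/k^2}$. The midpoint-rule inner product and the helper names are assumptions made for illustration.

```python
import numpy as np

N_POINTS = 20_000
dx = 2 * np.pi / N_POINTS
x = -np.pi + (np.arange(N_POINTS) + 0.5) * dx   # midpoints of [-pi, pi]

def inner(u, v):
    """Midpoint-rule approximation of <u, v> = int_{-pi}^{pi} u(x) v(x) dx."""
    return np.sum(u * v) * dx

f = x   # the odd function f(x) = x

def fourier_projection(f_vals, n):
    """Orthogonal projection of f onto span{sin(kx) : k = 1..n}."""
    proj = np.zeros_like(f_vals)
    for k in range(1, n + 1):
        e_k = np.sin(k * x)
        proj += inner(f_vals, e_k) / inner(e_k, e_k) * e_k   # b_k * sin(kx)
    return proj

def projection_error(f_vals, n):
    r = f_vals - fourier_projection(f_vals, n)
    return np.sqrt(inner(r, r))

for n in (1, 5, 25, 125):
    print(n, projection_error(f, n))   # decreases as frequencies are added
```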

But dealing with infinite-dimensional spaces isn’t without challenges. Solutions to equations in these spaces might not exist in the traditional sense or might not be unique. This leads us to the concept of weak or very weak solutions. By relaxing the requirements of a solution, we can find functions that satisfy the equations in an averaged or generalized sense. This is particularly common in fields like fluid dynamics, where classical solutions to the Euler or Navier-Stokes equations might be difficult to find or prove to be unique. So, what guarantees uniqueness in these cases?
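To make “satisfy the equations in an averaged sense” concrete, here is the standard weak formulation of a simple model problem, chosen for illustration: $-u'' = f$ on $(0, 1)$ with $u(0) = u(1) = 0$. Multiply by a test function $v$ that vanishes at both endpoints and integrate by parts. The boundary term $\left[-u' v\right]_0^1$ then drops out:

$$
-\int_0^1 u''(x)\, v(x)\, dx = \int_0^1 f(x)\, v(x)\, dx
\qquad \Longrightarrow \qquad
\int_0^1 u'(x)\, v'(x)\, dx = \int_0^1 f(x)\, v(x)\, dx \quad \text{for all } v \in H^1_0(0, 1).
$$

The weak form asks for only one derivative of $u$, and only in the $L^2$ sense ($u \in H^1_0(0, 1)$). It therefore makes sense for functions with no classical second derivative. For this linear model problem, the left-hand side is an inner product on $H^1_0(0, 1)$. The Riesz representation theorem then gives exactly one weak solution for every $f \in L^2(0, 1)$.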

Questions about existence and uniqueness often lead to open problems. For example, one of the Millennium Prize Problems asks whether smooth solutions of the three-dimensional incompressible Navier-Stokes equations exist for all time (the “existence and smoothness” problem).

Researchers explore additional conditions, such as entropy conditions for hyperbolic conservation laws, that can single out a unique or stable solution among many weak ones.

When solutions exist, we often need to find them numerically. Infinite-dimensional problems are impossible to compute directly, so we use numerical methods to approximate them with finite-dimensional ones.

Methods like the Finite Element Method and the Galerkin Method discretize the problem, reducing it to solving large but sparse linear systems.
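As a minimal sketch of how a Galerkin method turns an infinite-dimensional problem into a sparse linear system, consider piecewise-linear finite elements for $-u'' = f$ on $(0, 1)$ with $u(0) = u(1) = 0$. This model problem is chosen for illustration. With hat functions on a uniform mesh, the stiffness matrix $A_{ij} = \int_0^1 \varphi_i' \varphi_j'$ is tridiagonal, so it can be solved in $O(n)$ operations. The helper names, the test case $f = \pi^2 \sin(\pi x)$ (exact solution $\sin(\pi x)$), and the lumped load approximation $b_i \approx h f(x_i)$ are assumptions. With that load, the system coincides with the classical second-order finite-difference scheme.

```python
import numpy as np

def thomas_solve(lower, diag, upper, rhs):
    """Solve a tridiagonal system in O(n) operations (Thomas algorithm).

    Row i reads lower[i] * x[i-1] + diag[i] * x[i] + upper[i] * x[i+1] = rhs[i];
    lower[0] and upper[-1] are unused. No pivoting: fine for the SPD matrix below.
    """
    n = len(diag)
    c = np.zeros(n)
    d = np.zeros(n)
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):                       # forward elimination
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = np.zeros(n)
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):              # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def fem_poisson_1d(f, n_interior):
    """Piecewise-linear Galerkin FEM for -u'' = f on (0, 1), u(0) = u(1) = 0.

    Stiffness matrix: 2/h on the diagonal, -1/h on the off-diagonals.
    Load vector: b_i = int f phi_i, approximated by h * f(x_i).
    """
    h = 1.0 / (n_interior + 1)
    nodes = np.linspace(h, 1.0 - h, n_interior)
    diag = np.full(n_interior, 2.0 / h)
    off = np.full(n_interior, -1.0 / h)
    b = h * f(nodes)
    return nodes, thomas_solve(off, diag, off, b)

nodes, u_h = fem_poisson_1d(lambda x: np.pi ** 2 * np.sin(np.pi * x), 99)
print(np.max(np.abs(u_h - np.sin(np.pi * nodes))))   # nodal error of order h^2
```

Only three diagonals are stored and touched. This is why discretizations with millions of unknowns remain tractable.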

Here, functional analysis provides the theoretical foundation to study:

- Convergence: Do these approximations converge to the true solution? If so, how fast?

- Computational Complexity: What are the time and memory requirements?

- Stability: Are the solutions stable under small perturbations?
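A minimal sketch of how “how fast?” is usually answered in practice. Compute the error at successively halved step sizes $h$, then estimate the order $p$ from $\text{error} \approx C h^p$ via $p \approx \log_2(e_h / e_{h/2})$. The composite trapezoid rule for $\int_0^\pi \sin x \, dx = 2$ is only a simple stand-in with a known answer. The helper `trapezoid` is written out here for illustration rather than taken from a library.

```python
import numpy as np

def trapezoid(f, a, b, n):
    """Composite trapezoid rule with n equal subintervals."""
    x = np.linspace(a, b, n + 1)
    y = f(x)
    h = (b - a) / n
    return h * (0.5 * y[0] + y[1:-1].sum() + 0.5 * y[-1])

exact = 2.0   # int_0^pi sin(x) dx
errors = [abs(trapezoid(np.sin, 0.0, np.pi, n) - exact) for n in (10, 20, 40, 80)]
# Halving h divides the error by about 2^p for a method of order p.
orders = [np.log2(e_coarse / e_fine) for e_coarse, e_fine in zip(errors, errors[1:])]
print(orders)   # each value close to 2: second-order convergence
```

The same halving procedure applies to the discretization error of FEM or finite-difference solvers when an exact solution is available.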

At the core of these analyses is the need to develop notions of convergence of sequences of functions and to study their properties.
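A classic example, sketched numerically, of why the mode of convergence matters: the functions $x^n$ on $[0, 1]$. We have $\|x^n\|_{L^2} = 1/\sqrt{2n+1} \to 0$, so $x^n \to 0$ in $L^2$. But $\|x^n\|_\infty = 1$ for every $n$, so $x^n$ does not converge to $0$ uniformly. The grid and the trapezoid-rule $L^2$ norm below are illustrative discretization choices.

```python
import numpy as np

x = np.linspace(0.0, 1.0, 100_001)   # grid on [0, 1], both endpoints included
h = x[1] - x[0]

def sup_norm(v):
    return np.max(np.abs(v))

def l2_norm(v):
    """Trapezoid-rule approximation of sqrt(int_0^1 v(x)^2 dx)."""
    w = v ** 2
    return np.sqrt(h * (w.sum() - 0.5 * (w[0] + w[-1])))

for n in (1, 10, 100, 1000):
    f_n = x ** n
    # sup norm stays at 1; L2 norm tracks 1/sqrt(2n + 1) and tends to 0
    print(n, sup_norm(f_n), l2_norm(f_n), 1 / np.sqrt(2 * n + 1))
```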

The concept of convergence depends on the structure of the space:

- Topological spaces: It’s enough to have the notion of open sets to discuss convergence.

- Metric spaces: A metric allows us to define the distance between functions, giving a more precise notion of convergence.

- Normed spaces: Norms generalize the concept of length, enabling us to measure the size of functions.

- Inner product spaces: With inner products, we can define angles and orthogonality, leading to projections and optimization methods.

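For example, in optimization and approximation, a candidate solution (such as an unconstrained minimizer) may lie outside the feasible region. We can then project it onto the feasible set, that is, find the closest point within the constraints. This projection relies on the geometric structure provided by inner product spaces: in a Hilbert space, every nonempty closed convex set has exactly one closest point to any given point.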
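A minimal finite-dimensional sketch, assuming the Euclidean inner product on $\mathbb{R}^5$ and an illustrative box $[0, 1]^5$ as the feasible set, chosen because projecting onto a box is just componentwise clipping. Onto a closed convex set $K$, the projection $p$ of $z$ is characterized by $\langle z - p, y - p \rangle \le 0$ for every $y \in K$. Geometrically, the residual makes an obtuse angle with every direction pointing into the set. The helper `project_onto_box` is hypothetical.

```python
import numpy as np

def project_onto_box(z, lo, hi):
    """Euclidean projection of z onto the box {x : lo <= x <= hi} (componentwise clip)."""
    return np.clip(z, lo, hi)

rng = np.random.default_rng(0)
lo, hi = np.zeros(5), np.ones(5)           # illustrative feasible set [0, 1]^5
z = rng.normal(scale=2.0, size=5)          # a point that is likely infeasible
p = project_onto_box(z, lo, hi)

# Check the projection characterization on many random feasible points y.
ys = rng.uniform(lo, hi, size=(1000, 5))
print(np.max((ys - p) @ (z - p)))          # <= 0
```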

In summary, functional analysis builds a hierarchy of spaces:

- Inner product spaces (angles) ⊂ Normed spaces (length) ⊂ Metric spaces (distance) ⊂ Topological spaces (open sets)
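Each inclusion comes from an induced structure. An inner product gives a norm $\|x\| = \sqrt{\langle x, x \rangle}$, a norm gives a metric $d(x, y) = \|x - y\|$, and a metric gives open balls and hence a topology. The inclusions are strict. By the Jordan-von Neumann theorem, a norm comes from an inner product exactly when it satisfies the parallelogram law $\|u+v\|^2 + \|u-v\|^2 = 2(\|u\|^2 + \|v\|^2)$. The sketch below, with illustrative vectors and hypothetical helper names, checks it for the Euclidean norm and the max norm on $\mathbb{R}^2$.

```python
import numpy as np

def parallelogram_gap(norm, u, v):
    """||u+v||^2 + ||u-v||^2 - 2(||u||^2 + ||v||^2); zero for all u, v iff the norm comes from an inner product."""
    return norm(u + v) ** 2 + norm(u - v) ** 2 - 2 * (norm(u) ** 2 + norm(v) ** 2)

def euclidean(w):
    return np.sqrt(np.dot(w, w))   # induced by the inner product <u, v> = u . v

def max_norm(w):
    return np.max(np.abs(w))       # a norm that no inner product induces

u, v = np.array([1.0, 0.0]), np.array([0.0, 1.0])
print(parallelogram_gap(euclidean, u, v))   # zero up to rounding: the law holds
print(parallelogram_gap(max_norm, u, v))    # -2.0: the law fails
```

The max norm still defines a perfectly good metric and topology, but no notion of angle. That is what the strict inclusion means.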

Each level adds structure, enabling us to tackle increasingly complex problems. By understanding these spaces and the relationships between them, we gain the tools needed for infinite-dimensional problems that arise in many fields and would otherwise be intractable.